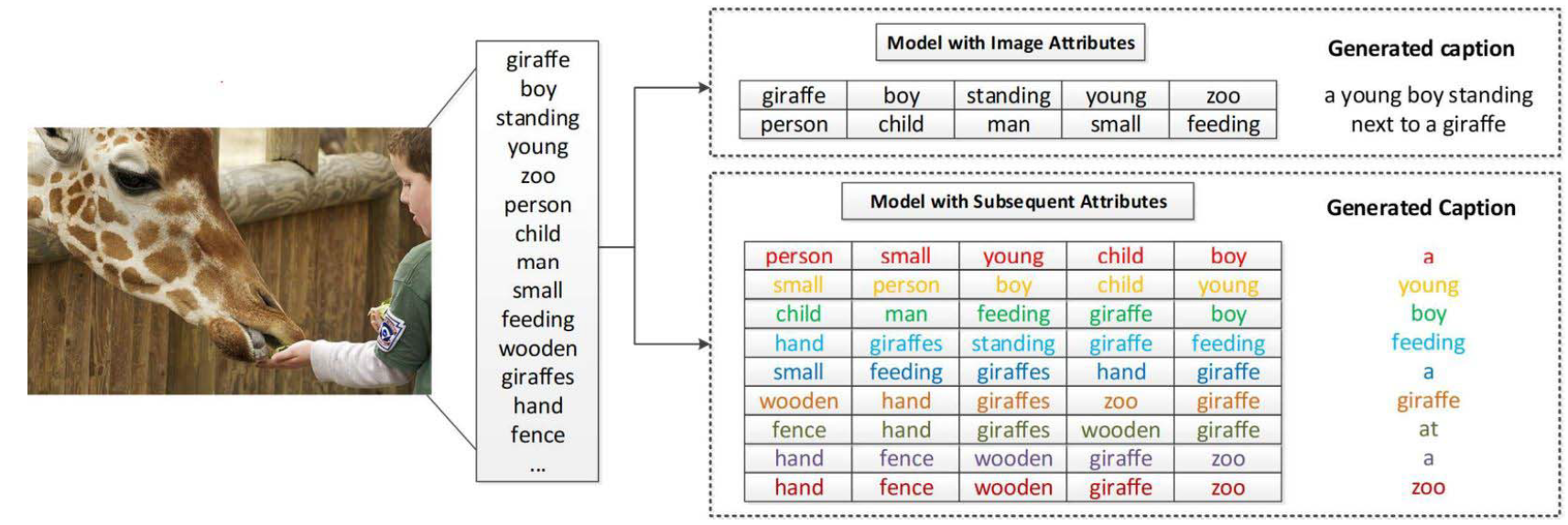

Image Captioning With End-to-End Attribute Detection and Subsequent Attributes Prediction

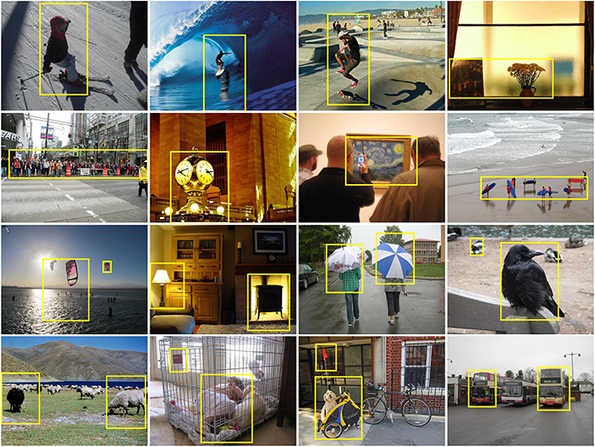

Abstract: Semantic attention has been shown to be effective in improving the performance of image captioning. The core of semantic attention based methods is to drive the model to attend to semantically important words, or attributes. In previous works, the attribute detector and the captioning network are usually independent, leading to insufficient use of the semantic information. Also, all the detected attributes, whether or not they are appropriate for the linguistic context at the current step, are attended to throughout the whole caption generation process. This may sometimes mislead the captioning model into attending to incorrect visual concepts. To solve these problems, we introduce two end-to-end trainable modules that closely couple attribute detection with image captioning and promote the effective use of attributes by predicting appropriate attributes at each time step. The multimodal attribute detector (MAD) module improves the attribute detection accuracy by using not only the image features but also the word embedding of attributes already existing in most captioning models. MAD models the similarity between the semantics of attributes and the image object features to facilitate accurate detection. The subsequent attribute predictor (SAP) module dynamically predicts a concise attribute subset at each time step to mitigate the diversity of image attributes. Compared to previous attribute based methods, our approach enhances the explainability of how the attributes affect the generated words and achieves a state-of-the-art single model performance of 128.8 CIDEr-D on the MSCOCO dataset. Extensive experiments on the MSCOCO dataset show that our proposal improves the performance of image captioning and attribute detection simultaneously.

Publication:

- Yiqing Huang, Jiansheng Chen, Wanli Ouyang, Weitao Wan, and Youze Xue, Image Captioning With End-to-End Attribute Detection and Subsequent Attributes Prediction, IEEE Transactions on Image Processing, Vol. 29, pp. 4013-4026, 2020.

- Yiqing Huang, Cong Li, Tianpeng Li, Weitao Wan, Jiansheng Chen, Image Captioning with Attribute Refinement, IEEE International Conference on Image Processing (ICIP), Sept. 2019.

| File | File Size | File Type |
| --- | --- | --- |
| image-captioning-with-mad-and-sap-master.zip | 41 kb | zip |

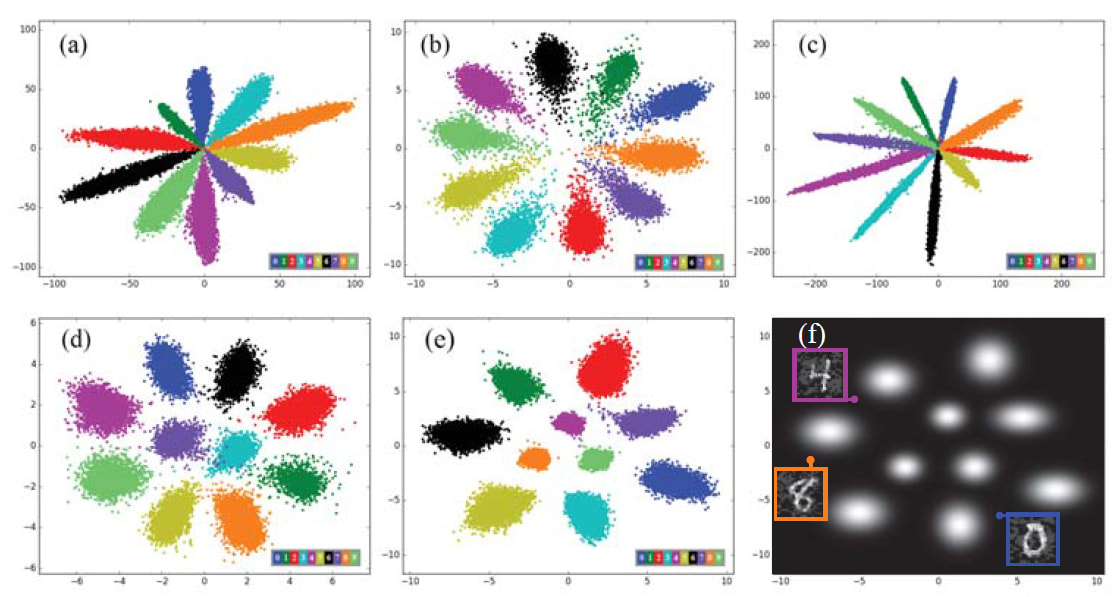

Rethinking Feature Distribution for Loss Functions in Image Classification

Abstract: We propose a large-margin Gaussian Mixture (L-GM) loss for deep neural networks in classification tasks. Unlike the softmax cross-entropy loss, our proposal is established on the assumption that the deep features of the training set follow a Gaussian Mixture distribution. By involving a classification margin and a likelihood regularization, the L-GM loss facilitates both a high classification performance and an accurate modeling of the training feature distribution. As such, the L-GM loss is superior to the softmax loss and its major variants in the sense that, besides classification, it can be readily used to distinguish abnormal inputs, such as adversarial examples, based on the likelihood of their features under the training feature distribution. Extensive experiments on various recognition benchmarks like MNIST, CIFAR, ImageNet and LFW, as well as on adversarial examples, demonstrate the effectiveness of our proposal.
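The combination of a classification margin and a likelihood regularization can be sketched as follows. This is a minimal illustration under simplifying assumptions that are not stated in the abstract: identity covariance for every Gaussian component, equal class priors, and a margin applied as a relative enlargement of the true-class distance. The names `lgm_loss`, `alpha` and `lam` are hypothetical and are not taken from the released code.

```python
import math

def lgm_loss(feature, means, label, alpha=0.3, lam=0.1):
    """Sketch of a large-margin Gaussian mixture loss for one sample.

    Assumes identity covariances and equal priors (illustrative only).
    """
    # Half squared Euclidean distance from the feature to every class mean.
    dists = [0.5 * sum((f - m) ** 2 for f, m in zip(feature, mu)) for mu in means]
    # Margin: the true-class distance is enlarged by the factor (1 + alpha).
    logits = [-(1.0 + alpha) * d if k == label else -d for k, d in enumerate(dists)]
    # Cross entropy over the margin-adjusted logits (stable log-sum-exp).
    top = max(logits)
    log_norm = top + math.log(sum(math.exp(z - top) for z in logits))
    cls_loss = log_norm - logits[label]
    # Likelihood regularization: negative log-likelihood under the true-class
    # Gaussian, up to an additive constant, with identity covariance.
    likelihood_reg = dists[label]
    return cls_loss + lam * likelihood_reg, dists

if __name__ == "__main__":
    class_means = [[0.0, 0.0], [4.0, 0.0]]
    loss, distances = lgm_loss([0.5, 0.0], class_means, label=0)
    print(loss, distances)
```

Under the same assumptions, the smallest entry of `dists` gives a simple abnormality score: a feature that lies far from every class mean has low likelihood under the training feature distribution.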

Grant:

- National Natural Science Foundation of China Project (#61673234)

Publication:

- Weitao Wan, Yuanyi Zhong, Tianpeng Li, Jiansheng Chen, Rethinking Feature Distribution for Loss Functions in Image Classification, IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Jun. 2018.

| File | File Size | File Type |
| --- | --- | --- |
| spotlight_ppt_weitaowan.pdf | 2061 kb | pdf |
| poster_weitaowan_cvpr2018.pdf | 1607 kb | pdf |
| l-gm-loss-master.zip | 9216 kb | zip |

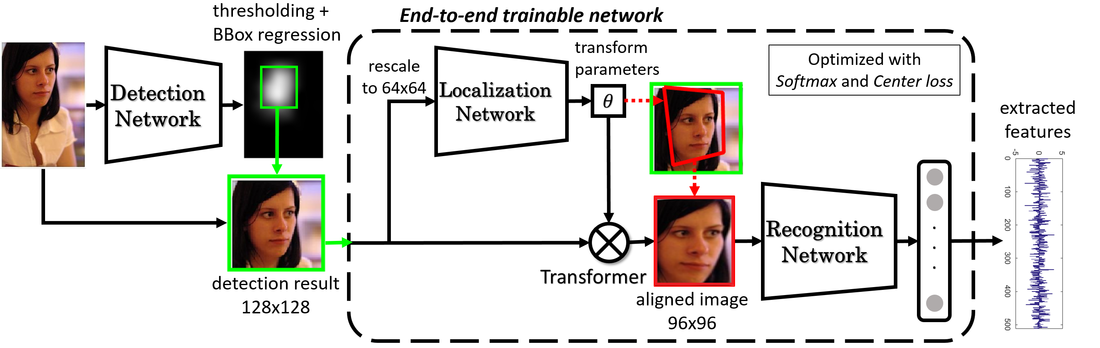

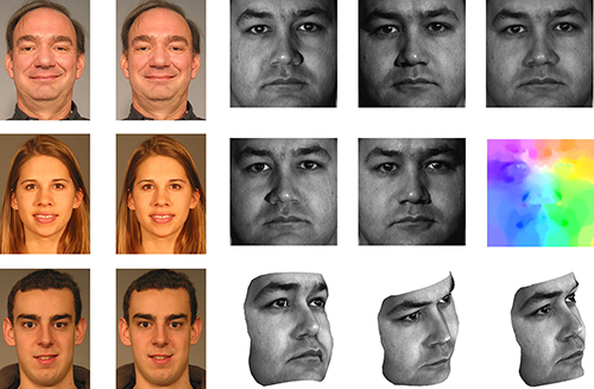

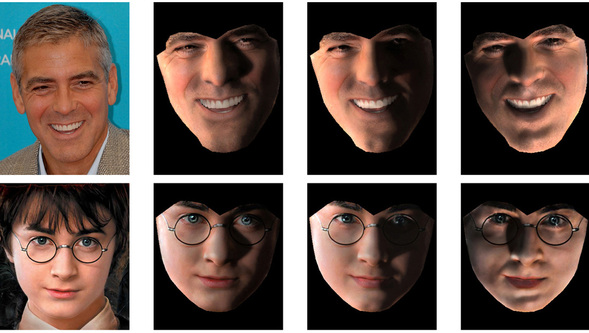

Toward End-to-End Face Recognition Through Alignment Learning

Abstract: A common practice in modern face recognition methods is to align the face area, based on prior knowledge of human face structure, before extracting recognition features. The face alignment is usually implemented independently, which makes it difficult to design end-to-end face recognition models. We study the possibility of end-to-end face recognition through alignment learning in which neither prior knowledge on facial landmarks nor artificially defined geometric transformations are required. Only human identity clues are used for driving the automatic learning of appropriate geometric transformations for the face recognition task. Trained purely on publicly available datasets, our model achieves a verification accuracy of 99.33% on the LFW dataset, which is on par with state-of-the-art single model methods.

Grant:

- National Natural Science Foundation of China Project (#61673234)

Publication:

- Yuanyi Zhong, Jiansheng Chen, Bo Huang, Toward End-to-End Face Recognition Through Alignment Learning, IEEE Signal Processing Letters, Vol. 24, No. 8, pp. 1213-1217, 2017.

| File | File Size | File Type |
| --- | --- | --- |
| e2eface.pdf | 424 kb | pdf |


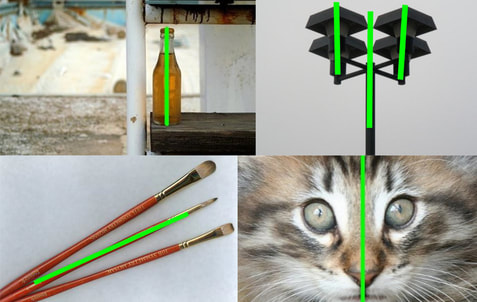

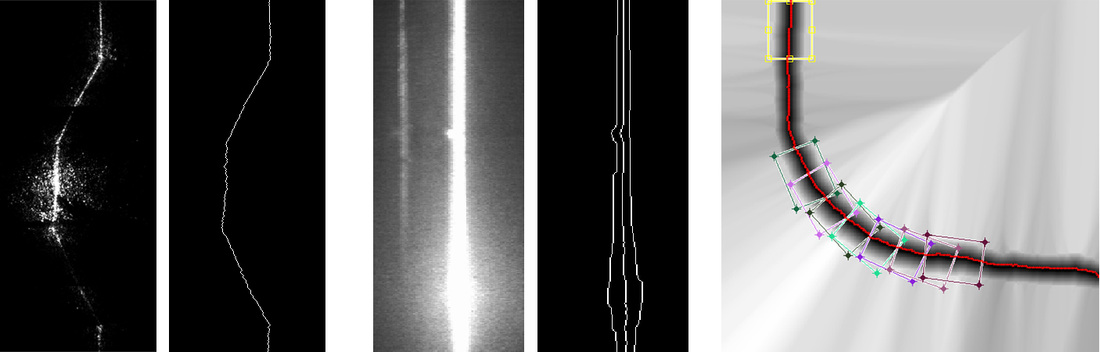

Palindrome based Symmetry Detection

Abstract: We developed a method that searches for the main symmetric axis in an image, based on the SAX representation and a classical linear-time palindrome algorithm. The method generates a curve outlining the axis by dynamic programming and produces a straight axis by RANSAC fitting. The computational complexity is O(mn) on an m*n image, which is linear in the number of pixels. The method can be extended to detect multiple symmetric axes. Compared with state-of-the-art symmetry detection methods, our method has comparable precision and is much faster.
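The abstract does not name the palindrome algorithm; a well-known linear-time choice is Manacher's algorithm, sketched below on an arbitrary symbol sequence such as one SAX-encoded image row. This is an illustrative sketch only: the SAX encoding, the dynamic programming step and the RANSAC fitting are not shown, and the function names are hypothetical.

```python
def palindrome_radii(symbols):
    """Manacher's algorithm: palindrome radius around every center in O(n)."""
    # Interleave a unique sentinel so odd and even palindromes are handled alike.
    sep = object()
    padded = [sep]
    for s in symbols:
        padded.append(s)
        padded.append(sep)
    n = len(padded)
    radius = [0] * n
    center = right = 0  # center and right edge of the rightmost palindrome found
    for i in range(n):
        if i < right:
            # Reuse the radius of the mirrored position inside that palindrome.
            radius[i] = min(right - i, radius[2 * center - i])
        lo, hi = i - radius[i] - 1, i + radius[i] + 1
        while lo >= 0 and hi < n and padded[lo] == padded[hi]:
            radius[i] += 1
            lo -= 1
            hi += 1
        if i + radius[i] > right:
            center, right = i, i + radius[i]
    return radius

def longest_palindrome(symbols):
    """Return (start, length) of the longest palindromic run in `symbols`."""
    radius = palindrome_radii(symbols)
    i = max(range(len(radius)), key=radius.__getitem__)
    return (i - radius[i]) // 2, radius[i]

if __name__ == "__main__":
    print(longest_palindrome("abacabad"))  # (0, 7)
```

For a row of symbols, the midpoint `start + length / 2` of the longest palindrome is a candidate position of the symmetry axis in that row.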

Grant:

- National Natural Science Foundation of China Project (#61101152)

- Tsinghua University Initiative Scientific Research Program Project (#20131089382)

- Beijing Higher Education Young Elite Teacher Project (#YETP0104)

Publication:

- Shaoheng Liang, Jiansheng Chen, Zhengqin Li, Gaocheng Bai, Linear Time Symmetric Axis Search based on Palindrome Detection, IEEE International Conference on Image Processing (ICIP), Sept. 2016.

| File Size: | 927 kb |

| File Type: | |


Automatic Image Cropping

Abstract: Attention based automatic image cropping aims at preserving the most visually important region in an image. A common task in this kind of method is to search for the smallest rectangle inside which the summed attention is maximized. We demonstrate that under appropriate formulations, this task can be achieved using efficient algorithms with low computational complexity. In a practically useful scenario where the aspect ratio of the cropping rectangle is given, the problem can be solved with a computational complexity linear in the number of image pixels. We also study the possibility of multiple rectangle cropping and a new model facilitating fully automated image cropping.
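To illustrate why rectangle sums over an attention map are cheap to evaluate, the sketch below uses a summed-area table to find the fixed-size window with the largest summed attention in time linear in the number of pixels. It is a simplified stand-in, not the formulation studied in the paper: the crop size is assumed to be given, and `best_crop` is a hypothetical name.

```python
def best_crop(attention, crop_h, crop_w):
    """Return (sum, top, left) of the crop_h x crop_w window with maximal attention."""
    rows, cols = len(attention), len(attention[0])
    # integral[r][c] holds the sum of attention over rows [0, r) and columns [0, c).
    integral = [[0.0] * (cols + 1) for _ in range(rows + 1)]
    for r in range(rows):
        row_sum = 0.0
        for c in range(cols):
            row_sum += attention[r][c]
            integral[r + 1][c + 1] = integral[r][c + 1] + row_sum
    best = (float("-inf"), 0, 0)
    for top in range(rows - crop_h + 1):
        for left in range(cols - crop_w + 1):
            bottom, right = top + crop_h, left + crop_w
            # Any rectangle sum costs four table lookups.
            total = (integral[bottom][right] - integral[top][right]
                     - integral[bottom][left] + integral[top][left])
            if total > best[0]:
                best = (total, top, left)
    return best

if __name__ == "__main__":
    attention_map = [
        [0, 0, 0, 0],
        [0, 5, 1, 0],
        [0, 2, 3, 0],
        [0, 0, 0, 0],
    ]
    print(best_crop(attention_map, 2, 2))  # (11.0, 1, 1)
```

Building the table and scanning all window positions each touch every pixel a constant number of times, which is where the linear cost comes from in this simplified setting.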

Grant:

- National Natural Science Foundation of China Project (#61101152)

- Tsinghua University Initiative Scientific Research Program Project (#20131089382)

- Beijing Higher Education Young Elite Teacher Project (#YETP0104)

Publication:

- Jiansheng Chen, Gaocheng Bai, Zhengqin Li, and Shaoheng Liang, Automatic Image Cropping: A Computational Complexity Study, IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Jun. 2016.

| File Size: | 1819 kb |

| File Type: | |


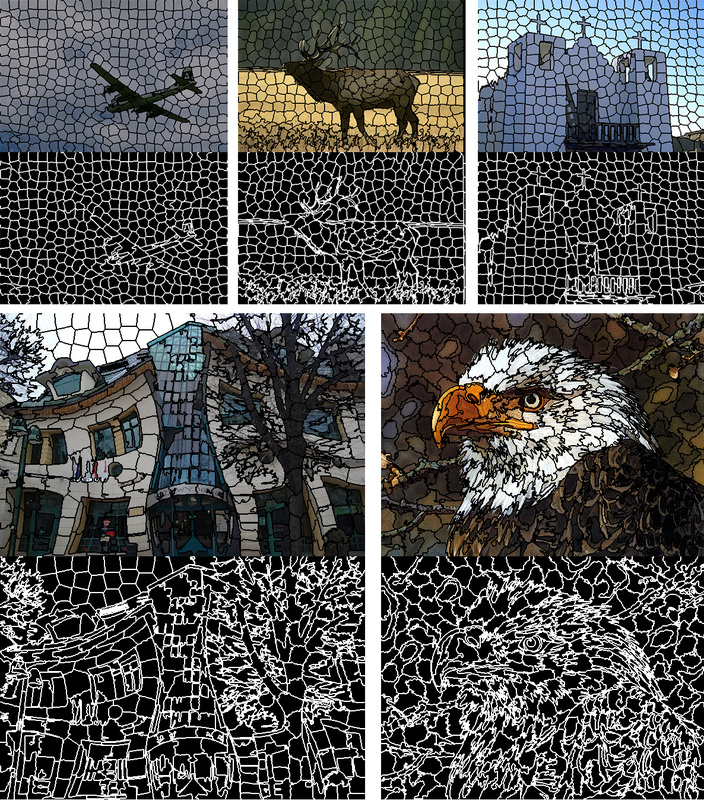

Linear Spectral Clustering Superpixel

Abstract: We present in this paper a superpixel segmentation algorithm called Linear Spectral Clustering (LSC), which produces compact and uniform superpixels with low computational costs. Basically, a normalized cuts formulation of the superpixel segmentation is adopted based on a similarity metric that measures the color similarity and space proximity between image pixels. However, instead of using the traditional eigen-based algorithm, we approximate the similarity metric using a kernel function, leading to an explicit mapping of pixel values and coordinates into a high dimensional feature space. We prove that by appropriately weighting each point in this feature space, the objective functions of weighted K-means and normalized cuts share the same optimum point. As such, it is possible to optimize the cost function of normalized cuts by iteratively applying simple K-means clustering in the proposed feature space. LSC is of linear computational complexity and high memory efficiency and is able to preserve global properties of images. Experimental results show that LSC performs as well as or better than state-of-the-art superpixel segmentation algorithms in terms of several commonly used evaluation metrics in image segmentation.

Grant:

- National Natural Science Foundation of China Project (#61101152, #61673234)

- Tsinghua University Initiative Scientific Research Program Project (#20131089382)

- Beijing Higher Education Young Elite Teacher Project (#YETP0104)

Publication:

- Zhengqin Li, Jiansheng Chen, Superpixel Segmentation using Linear Spectral Clustering, IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Jun. 2015.

- Jiansheng Chen, Zhengqin Li, Bo Huang, Linear Spectral Clustering Superpixel, IEEE Transactions on Image Processing, Vol. 26, No. 7, pp. 3317-3330, 2017.

| File Size: | 9111 kb |

| File Type: | |


Face Quality

Abstract: Face image quality is an important factor affecting the accuracy of automatic face recognition. It is usually possible for practical recognition systems to capture multiple face images from each subject. Selecting high-quality face images for recognition is a promising strategy for improving system performance. We propose a learning-to-rank based framework for assessing face image quality. The proposed method is simple and can adapt to different recognition methods. Experimental results demonstrate its effectiveness in improving the robustness of face detection and recognition.

Grant:

- National Natural Science Foundation of China Project (#61101152)

- Tsinghua University Initiative Scientific Research Program Project (#20131089382)

- Beijing Higher Education Young Elite Teacher Project (#YETP0104)

Publication:

- Jiansheng Chen, Yu Deng, Gaocheng Bai and Guangda Su, Face Image Quality Assessment Based on Learning to Rank, IEEE Signal Processing Letters, Vol. 22, No. 1, pp. 90-94, 2015.


Face Symmetry

Abstract: Human faces are highly but not precisely bilaterally symmetrical. We present in this letter a measurement of facial asymmetry based on the optical flow field between a face image and its bilaterally mirrored counterpart. We revisit the problem of facial asymmetry quantification and confirm some conclusions in previous research by applying the proposed asymmetry measurement on a dataset containing 4000 subjects. Moreover, the proposed measurement also contains information on facial asymmetry compensation and thus can be used to facilitate various face processing tasks such as face image beautification, 3D face reconstruction and face recognition. Experimental results show the flexibility and effectiveness of our proposal.

Grant:

- National Natural Science Foundation of China Project (#61101152)

- Tsinghua University Initiative Scientific Research Program Project (#20131089382)

Publication:

- Jiansheng Chen, Chang Yang, Yu Deng, Gang Zhang and Guangda Su, Exploring Facial Asymmetry using Optical Flow, IEEE Signal Processing Letters, Vol. 21, No. 7, pp. 792-795, Jul. 2014.

Code:

| File | File Size | File Type |
| --- | --- | --- |
| faceasym.zip | 263 kb | zip |

Welding Seam Extraction

Abstract: Based on prior knowledge about welding images, we present a welding seam tracking method using the image seam extraction technique. Owing to its optimisation nature, the proposed method is robust to strong image noise caused by environmental factors such as uneven illumination, light interference and welding spatter. In addition, the proposed method is of low time complexity and is highly adaptive to different types of welding images.
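The abstract does not spell out the seam extraction step. One common reading, assumed here, is the dynamic programming search for a minimum-cost connected path through a per-pixel cost map, as known from seam carving. The sketch below follows that assumption; the cost map and the name `min_cost_seam` are hypothetical, and the paper's actual cost design is not reproduced.

```python
def min_cost_seam(cost):
    """Return one column index per row forming a minimum-cost 8-connected vertical seam."""
    rows, cols = len(cost), len(cost[0])
    # acc[r][c] is the cheapest cost of any seam ending at pixel (r, c).
    acc = [list(cost[0])]
    for r in range(1, rows):
        prev = acc[-1]
        acc.append([
            cost[r][c] + min(prev[max(c - 1, 0):min(c + 2, cols)])
            for c in range(cols)
        ])
    # Backtrack from the cheapest pixel in the last row.
    c = min(range(cols), key=acc[-1].__getitem__)
    seam = [c]
    for r in range(rows - 1, 0, -1):
        lo, hi = max(c - 1, 0), min(c + 2, cols)
        c = min(range(lo, hi), key=acc[r - 1].__getitem__)
        seam.append(c)
    seam.reverse()
    return seam

if __name__ == "__main__":
    cost_map = [
        [9, 1, 9],
        [9, 9, 1],
        [9, 1, 9],
    ]
    print(min_cost_seam(cost_map))  # [1, 2, 1]
```

Because the path is chosen by a global optimisation over the whole cost map, isolated noisy pixels have little influence on the result, which is consistent with the robustness described above.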

Grant:

- National Natural Science Foundation of China Project (#61101152)

Publication:

- Jiansheng Chen, Guangda Su and Shoubin Xiang, Robust Welding Seam Tracking using Image Seam Extraction, Science and Technology of Welding and Joining, Vol. 17, No. 2, pp. 155-161, Feb. 2012.


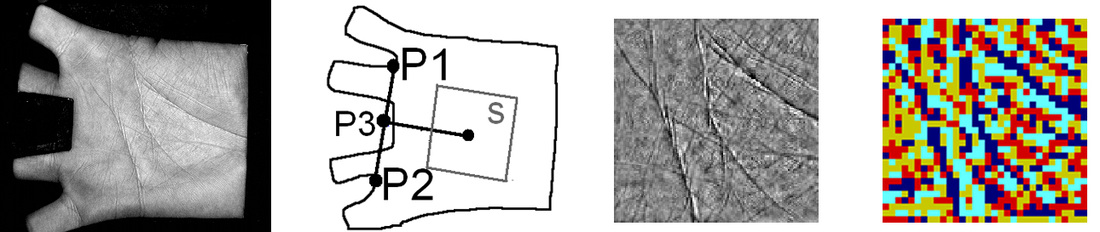

Palmprint Authentication

Abstract: We propose a new texture based approach for palmprint feature extraction, template representation and matching. An extension of SAX (Symbolic Aggregate approXimation), a time series technology, to 2D data is the key to making this new approach effective, simple, flexible and reliable. Experiments show that by adopting the simple feature of gray scale information only, this approach can achieve an equal error rate of 0.3% and a rank one identification accuracy of 99.9% on a public database of 7752 palmprints. This new approach has very low computational complexity, so it can be efficiently implemented on slow mobile embedded platforms. The proposed approach does not rely on any parameter training process and is therefore fully reproducible.
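For readers unfamiliar with SAX, the sketch below shows the standard one-dimensional version: z-normalization, piecewise aggregate approximation (PAA), and discretization against equiprobable Gaussian breakpoints. The 2D extension used for palmprints is not reproduced here, and the function name, the four-letter alphabet and the assumption that the series length is divisible by the number of segments are illustrative choices.

```python
from bisect import bisect_right
from statistics import NormalDist, mean, pstdev

def sax(series, segments, alphabet="abcd"):
    """Encode a numeric series as a SAX word with `segments` symbols."""
    mu, sigma = mean(series), pstdev(series)
    # z-normalize; a constant series maps to all zeros.
    normed = [(v - mu) / sigma if sigma > 0 else 0.0 for v in series]
    # PAA: mean of each equal-width segment (length assumed divisible by segments).
    width = len(normed) // segments
    paa = [mean(normed[i * width:(i + 1) * width]) for i in range(segments)]
    # Breakpoints split the standard normal distribution into equiprobable bins.
    k = len(alphabet)
    breakpoints = [NormalDist().inv_cdf(j / k) for j in range(1, k)]
    return "".join(alphabet[bisect_right(breakpoints, v)] for v in paa)

if __name__ == "__main__":
    print(sax([0, 0, 4, 4, 6, 6, 10, 10], segments=4))  # abcd
```

Two SAX words of equal length can then be compared symbol by symbol, which keeps matching cheap and requires no training.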

Grant:

- Hong Kong Research Grant Council Project (#415205)

Publication:

- Jiansheng Chen, Yiu-Sang Moon, Ming-Fai Wong, Guangda Su, Palmprint Authentication Using a Symbolic Representation of Images, Image and Vision Computing, Vol. 28, No. 3, pp. 343-351, Mar. 2010.

- Jiansheng Chen, Y.S. Moon, H.W. Yeung, Palmprint Authentication Using Time Series, International Conference on Audio- and Video-Based Biometric Person Authentication, NY USA, 2005.


Face Image Relighting

Abstract: We propose a face image relighting method using locally constrained global optimization. Based on the empirical fact that common radiance environments are locally homogeneous, we propose to use an optimization based solution in which local linear adjustments are performed on overlapping windows throughout the input image. As such, local textures and global smoothness of the input image can be preserved simultaneously when applying the illumination transformation.

Grant:

- Tsinghua Chuanxin Foundation Project (#110107001)

Publication:

- Jiansheng Chen, Guangda Su, Jinping He, Shenglan Ben, Face Image Relighting using Locally Constrained Global Optimization, European Conference on Computer Vision, Sept. 2010.


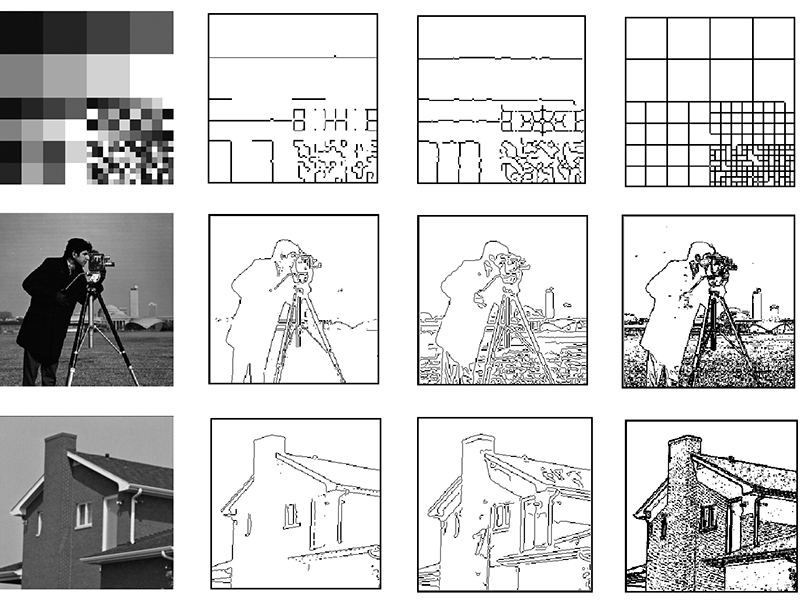

Edge Detection using Entropy

Abstract: We propose a simple and effective approach for edge detection using the image entropy defined on pixel grayscale values instead of the histogram. A strictly bounded function of local image entropy is designed for identifying abrupt changes of image intensity across edges. Mathematical properties of this function are analyzed to validate its applicability in the edge detection task. Edge pixels are segmented using a Pulse Coupled Neural Network in which the connectivity prior of edge pixels is used. Experimental results demonstrate that our method can robustly detect edges in synthetic as well as natural images.
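One way to read "entropy defined on pixel grayscale values instead of the histogram" is to normalize the gray values inside a local window so that they sum to one and take the Shannon entropy of those proportions. The sketch below follows that reading, which is an assumption. The bounded response `edge_response` is a hypothetical illustration and is not the function designed in the paper, and the Pulse Coupled Neural Network segmentation step is not shown.

```python
import math

def window_entropy(gray_values):
    """Shannon entropy of the gray values in a window, treated as proportions."""
    total = sum(gray_values)
    if total == 0:
        # An all-black window is perfectly uniform, so return the maximum.
        return math.log(len(gray_values))
    return -sum((g / total) * math.log(g / total) for g in gray_values if g > 0)

def edge_response(gray_values):
    """Hypothetical response in [0, 1]: 0 for a flat window, larger near abrupt changes."""
    return 1.0 - window_entropy(gray_values) / math.log(len(gray_values))

if __name__ == "__main__":
    flat_window = [100] * 9
    edge_window = [10, 10, 10, 10, 10, 200, 200, 200, 200]
    print(edge_response(flat_window), edge_response(edge_window))
```

The entropy reaches its maximum, the logarithm of the window size, only when all pixels in the window are equal, so any intensity step across the window lowers it and raises the response.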

Grant:

- Key Project of the Ministry of Public Security of China (#2005ZDGGQHDX005)

- National 973 Project of China (#2007CB310600)

Publication:

- Jiansheng Chen, Jingping He, Guangda Su, Combining Image Entropy with the Pulse Coupled Neural Network in Edge Detection, International Conference on Image Processing, Sept. 2010.
